Research

3.1 Motivation for Pattern Recognition

Giving a computer or robot the ability to see in any way is an important and exciting project. As technology has moved forward this has become increasingly feasible: it no longer requires huge machines with massive processing power, and can quite easily be run on a desktop PC. It would not be practical to imitate the human recognition process, however, because we find it difficult to understand how that process works, let alone to emulate it.

Pattern recognition is a field of study in its own right. It ranges from earthquake shock waves, through patterns in a sound wave and patterns in the stars, to patterns in population statistics. This project focuses entirely on image recognition, starting with very simple shapes (a triangle or a square) and working up to more difficult structures (time permitting). The first studies involving pattern recognition were aimed at optical character recognition.

Pattern recognition is a very large area of study and is closely tied to automated decision-making. Typically a set of data about a given situation is available (usually a set of numbers arranged as a tuple or vector), and based on this data a machine should be able to make an informed decision about what to do. A burglar alarm is one example: the system must decide between two outcomes (intruder, no intruder) based on a set of radar, acoustic and electrical measurements.

The most general view of pattern recognition theory is shown in figure 3.1.

Figure 3.1 - General view of Pattern Recognition

Images are central to how we perceive the world; our entire environment is made up of thousands upon thousands of images, so it is not surprising that pattern recognition should become involved in this subject. Recognition of an image can mean many different things, and because of this there is no single theory on the best way to recognise an image. Developers working on a particular problem in this field have therefore tended to invent their own methods, which yield the best possible results for their situation. Hence there is no standard solution to recognising images.

3.2 Image Processing Theory

All images have to be processed in some way before any type of recognition can even be attempted.

3.2.1 Colours

A 2-D image is an arrangement of colours within a finite border [1]. This is simplified by considering one full colour image to be made up of three monochrome images (showing the red, green and blue content respectively). Any three independent colours can be used for this process, but red, green and blue are most commonly used because they are the colours used to form images on a standard colour display. Any colour can be made up from a triple of numbers (r,g,b); this is shown in a colour cube (figure 3.2).

Figure 3.2 – A Colour Cube

It has often been argued that a monochrome image holds as much of the information needed for recognition as a colour image. It is obvious to us by observation that a recognisable object will be easily identified both in black and white and in colour, but is it possible for a computer to achieve the same results?

Another term for a black and white monochrome image is a grey level image, because each point in the image is assigned a numerical value of how bright or grey it is. Mathematically a monochrome image can be considered to be a two-dimensional array of numbers, in which the value at each point is given by a function z = f(x,y). The numerical value is bounded by the maximum and minimum brightness available in the image.
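As an illustration of the array view described above, the following sketch converts a colour image into a two-dimensional array of grey levels using the standard java.awt.image.BufferedImage class. The names GreyLevel and toGrey are hypothetical, and taking the unweighted mean of the three channels is an assumption; weighted averages are also in common use.

```java
import java.awt.image.BufferedImage;

final class GreyLevel {
    /** Returns z[y][x] = f(x, y), the grey level (0-255) of each pixel. */
    static int[][] toGrey(BufferedImage img) {
        int w = img.getWidth(), h = img.getHeight();
        int[][] z = new int[h][w];
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                int rgb = img.getRGB(x, y);   // packed as 0xAARRGGBB
                int r = (rgb >> 16) & 0xFF;
                int g = (rgb >> 8) & 0xFF;
                int b = rgb & 0xFF;
                z[y][x] = (r + g + b) / 3;    // assumed: unweighted mean of the channels
            }
        }
        return z;
    }

    public static void main(String[] args) {
        BufferedImage img = new BufferedImage(2, 1, BufferedImage.TYPE_INT_RGB);
        img.setRGB(0, 0, 0xFFFFFF);           // white pixel
        img.setRGB(1, 0, 0x000000);           // black pixel
        int[][] z = toGrey(img);
        System.out.println(z[0][0] + " " + z[0][1]);
    }
}
```

The result is indexed as z[y][x] so that each row of the array corresponds to one row of pixels.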

3.3 Image Recognition Theory

3.3.1 Features and Feature Extraction

The main principle of feature extraction is to process the image to derive data that contains enough information to discriminate the current image from other objects and classify it, whilst removing all irrelevant details (such as noise). Put simply, feature extraction should:

- Find descriptive and discriminating feature(s).

- Find as few as possible of them – to aid classification.

This leads to an updated view of pattern recognition, compared with that shown in figure 3.1; it is shown in figure 3.3, below.

Figure 3.3 - Updated view of Pattern Recognition

3.3.2 Classification

The job of the classifier is to identify, based on the contents of the data vector (y), which class the input x belongs to. This may involve looking for the greatest similarity between x and any image stored in the database, or just checking to see whether the same pattern of edges appears in both images.
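One way to read "greatest similarity" is as a nearest-neighbour search over stored feature vectors. The sketch below assumes Euclidean distance as the similarity measure; the class name, the feature values and the labels are all hypothetical and are not taken from the project itself.

```java
final class NearestNeighbour {
    /** Squared Euclidean distance between two feature vectors of equal length. */
    static double distanceSquared(double[] a, double[] b) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            double d = a[i] - b[i];
            sum += d * d;
        }
        return sum;
    }

    /** Returns the label of the stored vector that lies closest to y. */
    static String classify(double[] y, double[][] stored, String[] labels) {
        int best = 0;
        for (int i = 1; i < stored.length; i++) {
            if (distanceSquared(y, stored[i]) < distanceSquared(y, stored[best])) {
                best = i;
            }
        }
        return labels[best];
    }

    public static void main(String[] args) {
        double[][] stored = { {3, 3}, {4, 4} };      // hypothetical features, e.g. (edges, corners)
        String[] labels = { "triangle", "square" };
        System.out.println(classify(new double[] {4, 3.9}, stored, labels)); // prints "square"
    }
}
```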

3.3.3 Edge Detection

A local feature is a subset of pixels at a particular location within an image which form a recognisable object in their own right [1]. In the context of this project, local feature extraction should be enough to distinguish one object from another. The theory of edge detection goes well beyond the scope of what can be covered within this project; suggested reading on the theory of edge detection can be found in Pattern Recognition, by James. The Java Advanced Imaging API (section 3.5.2) has built-in methods for edge detection. More details on these can be found in the online documentation for Java Advanced Imaging on the Sun Microsystems website [4].

3.4 Other Methods

With image and pattern recognition being such a wide field of study, it will come as no surprise that many different methods exist for image recognition. These range from the feature detection already discussed, to creating histograms, calculating the mean brightness and other frequency methods [1], and image segmentation [1]. These are all readily accepted methods, but in this project edge detection will be the main point of focus. New methods are always coming along (e.g. Eigenfaces, MIT’s latest method of face recognition, section 3.6.4).
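To make two of the simpler methods concrete, the sketch below computes a grey-level histogram and the mean brightness of an image held as a two-dimensional array of values in the range 0 to 255. The class and method names are hypothetical, and the array representation is an assumption carried over from section 3.2.1.

```java
final class Brightness {
    /** Counts how many pixels take each grey level from 0 to 255. */
    static int[] histogram(int[][] grey) {
        int[] counts = new int[256];
        for (int[] row : grey) {
            for (int v : row) {
                counts[v]++;
            }
        }
        return counts;
    }

    /** Mean grey level of the image, or 0 for an empty image. */
    static double mean(int[][] grey) {
        long sum = 0;
        long n = 0;
        for (int[] row : grey) {
            for (int v : row) {
                sum += v;
                n++;
            }
        }
        return n == 0 ? 0.0 : (double) sum / n;
    }
}
```

Two images could then be compared by comparing their histograms or mean values, although neither measure says anything about where in the image the brightness occurs.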

3.5 Java and Image Recognition

3.5.1 Java2

JDBC (which is a trademark, not an acronym, although often thought of as “Java Database Connectivity”) is a set of Java classes and interfaces for executing SQL statements, which provides developers with a standard method for connecting to databases. With JDBC, database commands can be sent to any relational database for which a driver is available. Java’s property of write once, run anywhere allows many machines of different types (Macintosh, PCs running Microsoft Windows or Unix) to connect to the same database across any network, either the Internet or an intranet.

In its simplest form JDBC does three things:

- Connects to a database.

- Sends an SQL statement.

- Processes the results.

Below is a brief code fragment, which

demonstrates this.

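    // Requires: import java.sql.*;
    // 1. Connect to the database; the resources are closed automatically on exit.
    try (Connection con = DriverManager.getConnection("jdbc:odbc:wombat", "login", "password");
         Statement stmt = con.createStatement();
         // 2. Send an SQL statement.
         ResultSet rs = stmt.executeQuery("SELECT a, b, c FROM Table1")) {
        // 3. Process the results, one row at a time.
        while (rs.next()) {
            int x = rs.getInt("a");
            String s = rs.getString("b");
            float f = rs.getFloat("c");
        }
    }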

In the original specification for this project (including networking, see Appendix A) a three-tier database structure would have been appropriate (figure 3.4). After the update to the project it is more appropriately modelled with a two-tier database structure (figure 3.5).

Figure 3.4 – Three Tier Database Structure

Figure 3.5 – Two Tier Database Structure

A three-tier architecture (figure 3.4) allows commands to be sent to a middle tier, which then makes the connection and SQL calls to the database. This approach is particularly appealing to programmers because it allows updates to be made to the server without affecting the client applet or application (so long as the function calls remain the same).

3.5.3 Java Edge Detection

Figure 3.6 – An example of Java Edge Detection

3.5.3.1 Java Advanced Imaging API

Sun Microsystems describe their Advanced Imaging API as a

product that allows sophisticated high performance image processing

functionality to broaden the Java platform and be incorporated in Java

applets and applications.

Java Advanced Imaging supports network imaging (via Remote Method Invocation or the Internet Imaging Protocol) and has an extensible framework that allows developers to plug in customised solutions and algorithms.

Over 80 optimised image-processing operations, across

various image types are supported alongside a wide range of variables

required whilst working with images. Capabilities are also provided to

mix/overlay graphics and images together.

Many image-processing techniques are achieved by use of spatial filters, which operate over a local region surrounding a pixel in an image. The most commonly used spatial filtering technique is convolution (the dictionary defines “convolution” as a twisting, coiling or winding together). Convolution is an operation between two images; the smaller image is called the kernel of the convolution and holds a set of weights. The kernel is centred on each pixel of the image in turn, each pixel value under the kernel is multiplied by the corresponding kernel weight, and the products are summed, so that each output pixel is a weighted sum of the area surrounding the input pixel. The area taken into account (the region of support) is the area of the kernel that is non-zero. Lyon [6] discusses the maths of convolution in far more detail.
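The weighted sum described above can be sketched in plain Java as follows. This is an illustration only and is not the Java Advanced Imaging implementation; the class name is hypothetical, the kernel is assumed to have odd dimensions, and border pixels that the kernel cannot fully cover are simply left at zero.

```java
final class Convolution {
    /** Convolves a grey-level image with a kernel of odd width and height. */
    static double[][] convolve(double[][] img, double[][] kernel) {
        int h = img.length, w = img[0].length;
        int kh = kernel.length, kw = kernel[0].length;
        int cy = kh / 2, cx = kw / 2;                 // centre of the kernel
        double[][] out = new double[h][w];            // border pixels stay at 0
        for (int y = cy; y < h - cy; y++) {
            for (int x = cx; x < w - cx; x++) {
                double sum = 0.0;
                for (int j = 0; j < kh; j++) {
                    for (int i = 0; i < kw; i++) {
                        // The kernel is flipped in both directions (true convolution).
                        sum += kernel[j][i] * img[y + cy - j][x + cx - i];
                    }
                }
                out[y][x] = sum;
            }
        }
        return out;
    }
}
```

A 3x3 kernel whose nine weights are all 1/9 would, for example, replace each interior pixel with the average of its neighbourhood.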

Java Advanced Imaging has many functions for edge detection, using a variety of different kernels. An edge is defined as an abrupt image frequency change in a relatively small area of an image. These frequency changes normally occur at the boundaries of objects, where the amplitude of one object changes to that of another object or its surroundings (see figure 3.6).

The GradientMagnitude operation (within the Advanced Imaging API) is an edge detector; it computes the magnitude of the image gradient vector in two orthogonal directions [4]. This is done by use of a spatial filter, which can detect a specific pixel brightness slope within a group of pixels. A steep brightness slope indicates the presence of an edge.

This allows edges to be defined, which makes an image easier for the computer to use, by identifying only pixels that have a large gradient magnitude.

The GradientMagnitude operation performs two convolutions on the original image, detecting edges in one direction and then again in the orthogonal direction. These two convolutions create two intermediate images. All pixel values in the two intermediate images are then squared, creating a further two images. These two squared images are added together and the square root of the sum is taken, creating the final image. Using these methods for edge detection it is possible to use a variety of different kernels (or gradient masks) to detect edges in an image in different ways.
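The sequence of steps described above can be sketched without the Advanced Imaging API. The code below is a stand-in rather than the JAI implementation: it assumes the Sobel gradient masks, uses a hypothetical class name, and leaves the one-pixel border at zero.

```java
final class GradientMagnitude {
    // Sobel gradient masks (an assumed choice), one per orthogonal direction.
    static final int[][] GX = { {-1, 0, 1}, {-2, 0, 2}, {-1, 0, 1} };
    static final int[][] GY = { {-1, -2, -1}, {0, 0, 0}, {1, 2, 1} };

    /** Returns sqrt(gx^2 + gy^2) for every interior pixel of a grey-level image. */
    static double[][] magnitude(int[][] grey) {
        int h = grey.length, w = grey[0].length;
        double[][] out = new double[h][w];
        for (int y = 1; y < h - 1; y++) {
            for (int x = 1; x < w - 1; x++) {
                int gx = 0, gy = 0;
                // The masks are applied without flipping; for these masks that only
                // changes the sign of gx and gy, which the squaring removes.
                for (int j = -1; j <= 1; j++) {
                    for (int i = -1; i <= 1; i++) {
                        gx += GX[j + 1][i + 1] * grey[y + j][x + i];
                        gy += GY[j + 1][i + 1] * grey[y + j][x + i];
                    }
                }
                out[y][x] = Math.sqrt((double) gx * gx + (double) gy * gy);
            }
        }
        return out;
    }
}
```

Pixels whose magnitude exceeds a chosen threshold can then be treated as edge pixels; the threshold itself is left to the caller.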

Even with all of these image analysis methods readily in place within the Java Advanced Imaging API, the decision was made not to use them. After considerable testing and research into the JAI, I found it to be quite slow at times, and with no source code available (being a native implementation) it would be difficult to find ways to speed it up. This API is also still very much in its early stages: during this project version 1.0 has been upgraded to 1.1, but as yet very little example code and very few books on the subject are available (other than the Sun documentation).

3.5.3.2 Morphological Filtering

Edge detection can be achieved by convolution or by morphological filtering. Morphological filtering is far more accurate as it produces an edge that is only a single pixel wide. Morphological filters manipulate the shape of an object and work better for binary or grey-level images.

Morphological filtering is like convolution, except that it uses set operations as opposed to multiplication and addition. The centre of a kernel is moved around the image one pixel at a time and a set operation is performed on every pixel that overlaps this kernel. This is best performed on a grey image using a kernel of odd dimensions (so that the centre can be found).

To find the inside edges of an object using morphological filtering it is necessary to use an erosion filter (to find the outside edge a dilation filter is required). This reduces the size of an object by eroding its boundary (a dilation filter would add to the boundary); the eroded image is then subtracted from the original image.

Figure 3.7 – Two Dimensional integer space

At this point it is useful to discuss some set theory [6]. For images we must restrict the sets to point sets in a two-dimensional integer space, Z² (figure 3.7).

Figure 3.8 – Point Translation

Each element in A is a two-dimensional point consisting of integer co-ordinates. If the points contained in A were drawn they would show an image. It is possible to translate a point set by another point x (figure 3.8).

After defining a structuring element B (which can be used to measure the structure of A), the equation shown in figure 3.8 is used to translate B inside of A, thus creating a new set C. C consists of a translation of the elements in A by the elements in B.

Figure 3.9 – Set Erosion

Thus erosion is defined as (figure 3.9):
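The equations in figures 3.7 to 3.9 are not reproduced in this text. In the standard notation of mathematical morphology, which is assumed here to correspond to those figures, the point set, the translation of a point set and erosion are written as:

```latex
\begin{align*}
A &\subseteq \mathbb{Z}^2
  && \text{(point set)} \\
A + x &= \{\, a + x \mid a \in A \,\}
  && \text{(translation of } A \text{ by the point } x\text{)} \\
A \ominus B &= \{\, x \in \mathbb{Z}^2 \mid B + x \subseteq A \,\}
  && \text{(erosion of } A \text{ by } B\text{)}
\end{align*}
```

In words, the erosion of A by B keeps only those positions x at which the translated structuring element B + x fits entirely inside A. For a grey-level image and a flat structuring element this reduces to taking the minimum value over the neighbourhood covered by B.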

In computing terms this is achieved by eroding the boundaries of each object:

- For every pixel in the image:

- Store in the current pixel the minimum value of all the pixels surrounding it. (During erosion all the surrounding pixels carry an equal weighting, using the symmetrical structuring element shown in figure 3.10.)

Figure 3.10 – Symmetric Structuring Element
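The two steps listed above can be sketched as follows, assuming a 3x3 structuring element in which every position carries equal weight and an image held as a two-dimensional array of grey levels. The class name is hypothetical, and ignoring neighbours that fall outside the image is an assumption about how the border is handled.

```java
final class Erosion {
    /** Grey-level erosion: each pixel becomes the minimum of its 3x3 neighbourhood. */
    static int[][] erode(int[][] img) {
        int h = img.length, w = img[0].length;
        int[][] out = new int[h][w];   // separate output, so only original values are read
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                int min = img[y][x];
                for (int j = -1; j <= 1; j++) {
                    for (int i = -1; i <= 1; i++) {
                        int yy = y + j, xx = x + i;
                        // Neighbours outside the image are ignored.
                        if (yy >= 0 && yy < h && xx >= 0 && xx < w) {
                            min = Math.min(min, img[yy][xx]);
                        }
                    }
                }
                out[y][x] = min;
            }
        }
        return out;
    }
}
```

Writing the result into a second array matters here: updating the image in place would let already eroded values influence their neighbours.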

To reduce the image further (from areas of black and white to single lines around an object) an outlining filter must be used. This is quite simply done by subtracting the eroded image from the original image to create the inside contour (figure 3.11).

Figure 3.11 – Outlining Filter

Subtracting the eroded array of data from the original array results in a black image with the edges of objects shown in white (an example is shown in figures 3.12 & 3.13).
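A minimal sketch of the outlining step is given below, under the same assumptions as before: a 3x3 structuring element with equal weights, an image stored as a two-dimensional array of grey levels, and hypothetical class and method names. The erosion helper is repeated so that the example stands alone.

```java
final class Outline {
    /** 3x3 grey-level erosion; neighbours outside the image are ignored. */
    static int[][] erode(int[][] img) {
        int h = img.length, w = img[0].length;
        int[][] out = new int[h][w];
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                int min = img[y][x];
                for (int j = Math.max(0, y - 1); j <= Math.min(h - 1, y + 1); j++) {
                    for (int i = Math.max(0, x - 1); i <= Math.min(w - 1, x + 1); i++) {
                        min = Math.min(min, img[j][i]);
                    }
                }
                out[y][x] = min;
            }
        }
        return out;
    }

    /** Inside contour: the original image minus its eroded version. */
    static int[][] insideContour(int[][] img) {
        int[][] eroded = erode(img);
        int[][] contour = new int[img.length][img[0].length];
        for (int y = 0; y < img.length; y++) {
            for (int x = 0; x < img[0].length; x++) {
                contour[y][x] = img[y][x] - eroded[y][x];   // never negative, since eroded <= original
            }
        }
        return contour;
    }
}
```

For a white square on a black background the result is black everywhere except for a one-pixel-wide white ring just inside the boundary of the square.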

Figure 3.12 – Input Image

Figure 3.13 – Image after subtraction and erosion

3.5.4 Java Image Recognition

The black image with white edges obtained via the methods described in section 3.5.3.2 can then be used to generate polygons from all the white pixels touching each other in the image. An attempt can then be made to map each polygon onto images retrieved from a database. The images retrieved from the database will have been edge detected (and had their edges widened to allow for some degree of error) before any type of mapping takes place. The percentage of points that map correctly from one image to the other indicates the percentage accuracy of the match.
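The percentage calculation can be sketched as below. This is a simplified illustration rather than the project's matching code: it assumes both edge images are the same size and already aligned, represents white edge pixels as true, and uses hypothetical names throughout.

```java
final class EdgeMatch {
    /**
     * Percentage of edge pixels in the candidate image that fall on an edge
     * pixel of the (widened) reference image from the database.
     */
    static double percentMatch(boolean[][] candidate, boolean[][] reference) {
        int edgePoints = 0;
        int matched = 0;
        for (int y = 0; y < candidate.length; y++) {
            for (int x = 0; x < candidate[y].length; x++) {
                if (candidate[y][x]) {
                    edgePoints++;
                    if (reference[y][x]) {
                        matched++;
                    }
                }
            }
        }
        // An image with no edge pixels cannot match anything.
        return edgePoints == 0 ? 0.0 : 100.0 * matched / edgePoints;
    }
}
```

Widening the reference edges beforehand, as described above, means that a candidate edge which is a pixel or so out of place still counts as a match.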

3.6 Existing Work

3.6.1 Java Image Processing over the Internet [10]

Dongyang Wang and Bo Lin, under the supervision of Dr Jun Zhang, have developed an applet in pure Java that is capable of many image-processing tasks, from edge detection to fast Fourier transforms. This applet is very powerful, but does not perform any kind of image recognition, just analysis.

3.6.2 Human Face Detection in Visual Scenes [11]

Henry A. Rowley, Shumeet Baluja and Takeo Kanade have produced a neural network-based face detection system. This system examines small windows of an image and decides whether each window contains a face.

3.6.3 Neatvision [8]

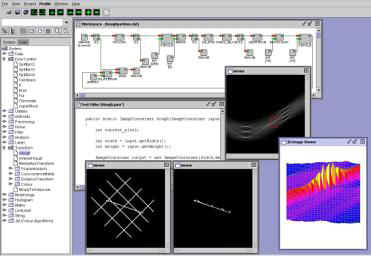

Neatvision (previously known as JVision) is a Java-based image analysis and software development environment, which gives users access to a wide variety of image processing algorithms through its own GUI. It allows for automatic code generation and error feedback, with support for Java AWT, Java 2D and the Java Advanced Imaging API. A sample screen shot is shown in figure 3.14.

Figure 3.14 – Neatvision Programming Environment

3.6.4 Photobook/Eigenfaces - MIT [9]

This method of facial recognition has been developed by MIT (Massachusetts Institute of Technology) and is reported to be 95% accurate even with wide variations in facial expression, glasses etc. It was able to process 7,652 faces in less than a second when trying to find a match. This rapid search time is achieved because each face is described by a very small number of eigenvector coefficients. The eigenspace method does not use template matching but calculates a “distance-from-feature-space”: essentially a feature map is created of the distances between facial features, and then these maps are compared (see figures 3.15 & 3.16).

Figure 3.15 – Screenshot of Photobook

Figure 3.16 – Feature Distances